Kubernetes and Grove for beginners

Components

Welcome to the Kubernetes and Grove for beginners guide. In this guide, we will take a brief tour of Kubernetes, Tutor and Grove, and look at how they work together to deploy the Open edX platform. If you already have a decent knowledge of Kubernetes and Tutor, feel free to skip this guide and move on to the next section.

For the rest, let's start with the absolute basics.

Docker

Docker builds the containers that Kubernetes consumes. To quote the Docker site:

A container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another. A Docker container image is a lightweight, standalone, executable package of software that includes everything needed to run an application: code, runtime, system tools, system libraries and settings.

In other words, a container packages an application together with everything it needs to run. Once you've built a container image, it runs the same way everywhere. This lets you package and deploy your app without the consumer needing anything other than Docker on their system.

Kubernetes

Kubernetes is an open source container orchestration platform. Using YAML configuration, it deploys your containers and provides a well-defined system for configuring the interactions between them. As a result, your apps can be deployed to any Kubernetes provider with minimal changes.

Horizontal scaling is supported out of the box. On a cloud provider, you don't have to provision new machines or storage by hand. With a cluster autoscaler and dynamically provisioned storage configured, this is handled for you automatically.

Everything within Kubernetes is an object. From the k8s documentation:

Kubernetes objects are persistent entities in the Kubernetes system. Kubernetes uses these entities to represent the state of your cluster. Specifically, they can describe: ... A Kubernetes object is a "record of intent"--once you create the object, the Kubernetes system will constantly work to ensure that object exists. By creating an object, you're effectively telling the Kubernetes system what you want your cluster's workload to look like; this is your cluster's desired state. ... Here's an example .yaml file that shows the required fields and object spec for a Kubernetes Deployment:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2 # tells deployment to run 2 pods matching the template
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80
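To try the manifest above on a test cluster, you would save it to a file and pass it to kubectl apply. You can then use kubectl scale to change the replica count, and Kubernetes moves the cluster towards that new desired state. The sketch below assumes a hypothetical filename, nginx-deployment.yaml, and a kubectl configured for a throwaway cluster. Set DRY_RUN=1 to print the commands instead of running them.

```shell
run() {
  # Print the command instead of executing it when DRY_RUN is set.
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Apply the example Deployment, change its replica count, then show it.
deploy_example() {
  local manifest="${1:-nginx-deployment.yaml}"  # hypothetical filename
  local replicas="${2:-3}"
  run kubectl apply -f "$manifest"
  run kubectl scale deployment/nginx-deployment --replicas="$replicas"
  run kubectl get deployment nginx-deployment
}
```

For example, DRY_RUN=1 deploy_example nginx-deployment.yaml 3 prints the three commands without touching a cluster.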

Kubernetes is a complex platform with an enormous number of toggles and switches. Grove only deals with a subset of these, but to work with that subset you'll need to understand some terminology.

Kubernetes terms

- Kubernetes cluster

- A Kubernetes cluster is a set of nodes that run containerized applications.

- Node

- A node is a worker machine in Kubernetes, i.e. the hardware the cluster is running on. In AWS terms it would be an EC2 instance; with other providers it may be a VPS.

- Node Pool

- A group of nodes within a cluster that all have the same configuration. A cluster can contain machines with different configurations, and a node pool groups the machines of one kind together. For example, you could have two node pools in your cluster: one containing machines with more powerful CPUs and another with more powerful GPUs. It is then possible to assign pods to the nodes that fit their workload.

- Pod

- The smallest and simplest Kubernetes object. A Pod represents a set of one or more running containers doing the work in the cluster. For instance, an Open edX deployment has a pod that runs the LMS, another for the CMS, one for Redis, and so on.

- Namespace

- Namespaces divide a physical cluster into multiple virtual clusters. Pods within a namespace can reach each other's services by their short names, similar to docker-compose. Grove, with Tutor's help, organizes each Open edX instance into its own namespace. You can view namespaces by running ./kubectl get namespaces.

- Deleting a namespace deletes all resources attached to it.

- Deployment

- An API object that manages a replicated application, typically by running Pods with no local state.

- A Deployment defines how pods are to be created. Tutor's deployments file contains the definitions of the containers that need to run for an Open edX instance. To view the deployments of an instance, run ./kubectl get deployments --namespace {namespace}.

- ReplicaSet

- A ReplicaSet aims to keep a stable set of replica Pods running at any given time. In a Deployment you set the number of replicas, i.e. the number of pods to run by default, and you can raise it when you need more pods for horizontal scaling.

- Job

- A finite or batch task that runs to completion. Jobs create pods that run once and then exit. They're used for tasks like running migrations or checking that a service is up before starting a deployment.

- Volume

- A directory containing data, accessible to the containers in a Pod. Containers are ephemeral: files written inside a container are lost when it restarts. To store data permanently, you create a Persistent Volume (usually requested through a PersistentVolumeClaim) and mount it inside the pod.
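You can list each of the objects above with kubectl get. The sketch below inspects a single instance's namespace. It assumes you run it from the Grove control directory, so that ./kubectl is the Grove wrapper (set KUBECTL to use a different binary). The namespace name openedx-aws is only an example. Set DRY_RUN=1 to print the commands instead of running them.

```shell
run() {
  # Print the command instead of executing it when DRY_RUN is set.
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# List the main Kubernetes object types for one Open edX instance.
inspect_instance() {
  local kubectl="${KUBECTL:-./kubectl}"
  local ns="${1:?usage: inspect_instance NAMESPACE}"
  run "$kubectl" get namespaces
  local kind
  for kind in deployments replicasets pods jobs persistentvolumeclaims; do
    run "$kubectl" get "$kind" --namespace "$ns"
  done
}
```

Usage: inspect_instance openedx-aws.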

Tutor

Tutor is the officially blessed next generation deployment tool for the edx-platform. Tutor is an active project with a ton of community involvement.

Tutor has tools to assist with building the containers for Open edX and related projects. It also makes local development substantially easier than using the traditional devstack.

We won't delve into Tutor too deeply, as its documentation is quite good already. Check it out at https://docs.tutor.overhang.io/gettingstarted.html!

Grove

Grove uses Kubernetes and Tutor to make deploying Open edX to Kubernetes easier. Using these tools effectively requires a lot of setup and configuration. Grove provides a layer on top of them, so operators don't need to deal with (or even know) the peculiarities of these technologies to deploy their Open edX instances.

The Grove root directory

We've gone through the main technologies that are used in Grove. In this section, we'll dive deeper and briefly go over the Grove code.

The directory layout of Grove is as follows:

- CHANGELOG.md

- Contains the CHANGELOG for Grove. We compile all the commits and changes every month when we make a new release.

- cliff.toml

- To make generating the changelog from commits easier, git-cliff is used.

- commitlint.config.js

- Configuration file for commitlint. In Grove only commits adhering to the conventional commits format are allowed.

- CONTRIBUTING.md

- Notes on contributing to the project.

- docs

- Contains the markdown files to generate the documentation on grove.opencraft.com. MkDocs is used to compile the documentation.

- functions

- Any OpenFaaS functions can be found here.

- .gitlab

- Gitlab configuration for the project. Contains the templates for creating issues and merge requests.

- gitlab-ci

- This directory contains the CI configuration for any project based on grove-template. All pipelines are defined here, and projects forked from grove-template link to this directory for any of their Gitlab pipelines.

- .gitlab-ci.yml

- Configuration of gitlab pipelines that run within Grove, like validating commits.

- LICENSE

- AGPL

- .markdownlint.yml

- Linting configuration that checks that the markdown within the docs folder is consistent.

- mkdocs.yml

- Configuration file for the site docs.

- provider-aws

- Terraform code for the AWS provider. If you're deploying to AWS, the code in this module is used as the root module.

- provider-digitalocean

- Terraform code for the DigitalOcean provider. If you're deploying to DigitalOcean, the code in this module is used as the root module.

- provider-modules

- Shared Terraform code. If code can be shared between the different providers, it is placed in a module in this folder and referenced from the provider. For example, k8s-ingress contains the instructions for deploying the Nginx container.

- tools-container

- The Grove CLI. All Grove commands run within a container, so the user doesn't need specific tools (like terraform) installed on their system. All the code required to create this container, including the Grove CLI, is within this directory. This directory is discussed further in the next section.

- wrapper-script.rb

- The main script that invokes the tools container. When you run a Grove command, you actually run this script, which in turn translates your command into a command that runs within Docker.

The Grove Tools Container

Grove uses a Docker image named the tools-container to invoke any command required. With this approach, the user doesn't need to install anything on their system besides Docker. Once Grove is set up, you can run commands from within your cluster's control directory, for example:

- ./tf plan runs a terraform plan command for your infrastructure.
- ./kubectl get pods retrieves a list of pods.

When you run any of these commands, it invokes the Ruby script wrapper-script.rb. This script looks at the command and translates it into a command that runs within the Docker container. For instance, ./tf plan becomes

cd /workspace/grove/provider-aws && terraform plan

Note

The repository root is mounted at /workspace inside the tools-container that the wrapper script starts.

The CLI Discovery explains the rationale behind this approach.
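The translation can be pictured with the sketch below. It is a hypothetical bash approximation, not the real wrapper-script.rb. The image name grove-tools:latest and the GROVE_PROVIDER and TOOLS_IMAGE variables are assumptions made for illustration. The /workspace mount and the provider-aws path come from the description above. The functions only print commands; they don't run anything.

```shell
# Hypothetical: build the in-container command for "./tf ARGS...".
tf_inner_command() {
  local provider="${GROVE_PROVIDER:-aws}"   # assumption: aws or digitalocean
  echo "cd /workspace/grove/provider-${provider} && terraform $*"
}

# Hypothetical: wrap it in a docker run that mounts the repo root at /workspace.
tf_docker_command() {
  local repo_root="$1"; shift
  local image="${TOOLS_IMAGE:-grove-tools:latest}"  # hypothetical image name
  echo "docker run --rm -it -v ${repo_root}:/workspace ${image} bash -c '$(tf_inner_command "$@")'"
}
```

For example, tf_inner_command plan prints the same in-container command shown above.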

Deploying a new cluster

Deploying a cluster from scratch is a bit tedious, but it only needs to be done once. The full process is documented in the Getting Started section. For brevity, we summarize it below.

- Fork the grove-template repo into your Gitlab group and add a name for your cluster.

- Create a new Kubernetes Agent in your Gitlab fork.

- Get the relevant API keys for your provider, either AWS or DigitalOcean.

- If on AWS, get the API keys for MongoDB Atlas as well.

- Create a deploy token on Gitlab.

- Create a personal access token on Gitlab.

- Add all of the above to the CI/CD settings of your grove-template fork.

- Add all of the above to private.yml in your repo.

- Run ./tf plan && ./tf apply from the control directory in your fork to provision the infrastructure.

- Set up a DNS record for your cluster.

- Add an instance by running the Instance Create pipeline or ./grove new [instance-name].

- Run ./tf plan && ./tf apply again to create the infrastructure (mostly databases) for your new instance.

- Use the Update instance pipeline to deploy your instance.

- The pipeline is preferred because deploying takes multiple steps, which is error-prone when done locally.
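The local commands in the steps above can be sketched as two short functions. This sketch makes several assumptions: you run it from the control directory of your fork, the tokens and private.yml are already in place, and you create the DNS record by hand between the two phases. The final deployment is still left to the Update instance pipeline. Set DRY_RUN=1 to preview the commands.

```shell
run() {
  # Print the command instead of executing it when DRY_RUN is set.
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Phase 1: provision the cluster infrastructure.
provision_cluster() {
  run ./tf plan && run ./tf apply
}

# Phase 2 (after the DNS record exists): add an instance and create its infrastructure.
add_instance() {
  local name="${1:?usage: add_instance INSTANCE_NAME}"
  run ./grove new "$name" && run ./tf plan && run ./tf apply
}
```

Each command only runs if the previous one succeeded, mirroring the && chains in the list.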

Using commit-based pipelines

Grove works with commit-based pipelines. You can invoke them either by writing your commit message in a specific format or by calling a pipeline trigger.

Assume you've created an instance named "unladen-swallow". To deploy an updated version with your changes, you would commit them with the message "[AutoDeploy][Update] unladen-swallow|1" and push to run the pipeline.
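A small helper can build the commit message so the format isn't mistyped. This is a sketch under one assumption: the number after the | is the deployment request ID, matching the DEPLOYMENT_REQUEST_ID variable used with pipeline triggers. The helper only prints the message; committing and pushing are shown as comments.

```shell
# Build the commit message Grove looks for, e.g. "[AutoDeploy][Update] unladen-swallow|1".
deploy_commit_message() {
  local instance="${1:?usage: deploy_commit_message INSTANCE [REQUEST_ID]}"
  local request_id="${2:-1}"
  printf '[AutoDeploy][Update] %s|%s\n' "$instance" "$request_id"
}

# Usage (add --allow-empty if there are no file changes to commit):
#   git commit -m "$(deploy_commit_message unladen-swallow 1)"
#   git push
```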

Pipelines are fully documented in this section of the docs.

Using Pipeline triggers

The alternative (and in some cases necessary) method is to trigger the pipelines via the REST API.

Using curl, a pipeline trigger request has the following format:

curl -X POST \
     --fail \
     -F token=TOKEN \
     -F "ref={branch}" \
     https://gitlab.com/api/v4/projects/{project_id}/trigger/pipeline

For example, to update the existing instance unladen-swallow:

curl -X POST \
     -F token=your-secret-trigger-token \
     -F "ref=main" \
     -F "variables[INSTANCE_NAME]=unladen-swallow" \
     -F "variables[DEPLOYMENT_REQUEST_ID]=1" \
     -F "variables[NEW_INSTANCE_TRIGGER]=1" \
     https://gitlab.com/api/v4/projects/34602668/trigger/pipeline

The pipelines are all described under the Pipelines section.
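The trigger request can be wrapped in a function so that the instance name and request ID become parameters. The sketch reuses the form fields from the example above unchanged and adds --fail from the general format. GITLAB_TRIGGER_TOKEN and GITLAB_PROJECT_ID are hypothetical environment variable names. DRY_RUN=1 prints the curl invocation instead of sending it.

```shell
run() {
  # Print the command instead of executing it when DRY_RUN is set.
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Trigger the instance pipeline via the GitLab pipeline trigger API.
trigger_instance_pipeline() {
  local instance="${1:?usage: trigger_instance_pipeline INSTANCE [REQUEST_ID] [REF]}"
  local request_id="${2:-1}"
  local ref="${3:-main}"
  run curl -X POST --fail \
    -F "token=${GITLAB_TRIGGER_TOKEN:?set GITLAB_TRIGGER_TOKEN}" \
    -F "ref=${ref}" \
    -F "variables[INSTANCE_NAME]=${instance}" \
    -F "variables[DEPLOYMENT_REQUEST_ID]=${request_id}" \
    -F "variables[NEW_INSTANCE_TRIGGER]=1" \
    "https://gitlab.com/api/v4/projects/${GITLAB_PROJECT_ID:?set GITLAB_PROJECT_ID}/trigger/pipeline"
}
```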

What goes into a deployment

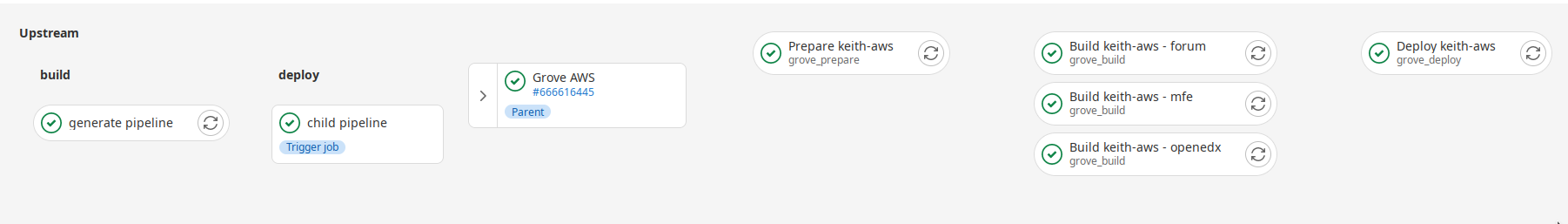

Consider this deployment pipeline (click on the image to get a larger version):

We'll consider each of the steps to give an overview of what happens when an Open edX instance is deployed.

Note

Gitlab pipelines are run in stages. By default, pipelines have the build, test and deploy stages. For this pipeline we haven't modified these stages.

generate pipeline

This is part of the build stage. The pipeline runs the command grove pipeline [your-commit-message], and Grove then generates extra YAML to determine the next step. In this case, the commit message was [AutoDeploy][Update] openedx-aws|1, so Grove created a YAML file to deploy and update the infrastructure.

The generated YAML file (it's called generated.yml in the code) is then passed on to a child pipeline, which processes it as its own pipeline.
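To make the dispatch step concrete, here is a hypothetical bash parser for the commit message format. It is not Grove's implementation, which lives in the grove pipeline command. It only shows how the action, instance name and request ID can be pulled out of a message like [AutoDeploy][Update] openedx-aws|1.

```shell
# Hypothetical: split "[AutoDeploy][Action] instance|id" into its parts.
# Prints "action instance id" on success; returns 1 if the message doesn't match.
parse_deploy_message() {
  local msg="$1"
  local re='^\[AutoDeploy\]\[([A-Za-z]+)\] ([A-Za-z0-9_-]+)[|]([0-9]+)$'
  if [[ $msg =~ $re ]]; then
    echo "${BASH_REMATCH[1]} ${BASH_REMATCH[2]} ${BASH_REMATCH[3]}"
  else
    return 1
  fi
}
```

A message that doesn't match, such as an ordinary commit, makes the function return 1. In that case no deployment step would be generated.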

child pipeline

This is just to show that there was a child pipeline that ran. It can be expanded to show further details.

prepare openedx-aws

In this stage, the instance's configuration is updated. In short, it runs tutor config save with the instance's config.yml, so any configuration changes are persisted for the next step, which is...

Building images

In this stage, images are built. Grove instructs Tutor to build all the images required for your deployment and push them to the Gitlab Container Registry. The images are used in the final deploy step.

We build three images by default:

- forum, courtesy of the tutor-forum plugin, enables the discussion forums.
- mfe compiles the MFEs that are part of your app into an image. tutor-mfe is responsible for this part.
- openedx builds an Open edX image, which is used for the LMS, CMS, etc.
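Run by hand, the prepare and build stages correspond roughly to the Tutor commands in the sketch below. It is an approximation for orientation, not the pipeline's actual script. It assumes Tutor is installed with the tutor-forum and tutor-mfe plugins enabled. It also assumes your Tutor configuration already points the image names at your Gitlab Container Registry. Set DRY_RUN=1 to print the commands.

```shell
run() {
  # Print the command instead of executing it when DRY_RUN is set.
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Approximate prepare + build stages for one instance.
prepare_and_build() {
  local images=(openedx mfe forum)   # the three default images listed above
  run tutor config save &&
    run tutor images build "${images[@]}" &&
    run tutor images push "${images[@]}"
}
```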

Deploy

In this stage, the images built in the previous step are deployed. If an image or its configuration hasn't changed, the running container stays as is. For example, if you haven't made any changes that affect the redis container, it keeps running without any changes.

Tutor runs the necessary Kubernetes commands to deploy the resources to your cluster. For each Open edX instance deployed we have:

- CMS pods for your Studio backend

- LMS pods for the LMS backend

- Celery worker pods for each of the above

- An MFE pod that handles requests to your MFEs

- A forum pod for the course discussion forums

- Redis for all the Celery things

- Elasticsearch for all the search things

- A Caddy container that should direct traffic to your LMS/CMS (this isn't actually needed for Grove and we should get rid of it, but can't)

- And, depending on your configuration, a celery-beat pod connected to Redis
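After a deployment, you can check that each component above has a Deployment in the instance's namespace. The sketch reads the output of ./kubectl get deployments --namespace NAMESPACE -o name on standard input and prints any expected names that are missing. The expected list is an assumption based on the components above and the example pod listing in the Debugging section, so adjust it to your configuration (celery-beat, for instance, is optional).

```shell
# Print expected component names that have no matching Deployment.
# Input lines look like "deployment.apps/lms".
missing_components() {
  local expected=(lms lms-worker cms cms-worker mfe forum redis elasticsearch caddy)
  local found=" "
  local line
  while IFS= read -r line; do
    found+="${line##*/} "   # keep only the name after the last "/"
  done
  local name
  for name in "${expected[@]}"; do
    case "$found" in
      *" $name "*) ;;        # present
      *) echo "$name" ;;     # missing
    esac
  done
}
```

Usage: ./kubectl get deployments --namespace openedx-aws -o name | missing_components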

Debugging

Running kubectl on your local machine

If you have kubectl installed already, it can be faster to run it on your local machine than through the tools-container.

Copy/pasting commands off the internet is also much easier.

kubectl reads the KUBECONFIG environment variable to determine which cluster it talks to. To connect to a Grove cluster, you need to export the kubeconfig file and point KUBECONFIG at it.

To do so, you can run these from the control directory:

./tf output -raw kubeconfig > ../kubeconfig-private.yml
cd ..
export KUBECONFIG="$(pwd)/kubeconfig-private.yml"

If you're using the AWS provider, both the AWS CLI and a valid AWS profile are required.

Working with pods

- List pods in all namespaces:

$ ./kubectl get pods --all-namespaces
NAMESPACE                 NAME                                  READY   STATUS    RESTARTS   AGE
gitlab-kubernetes-agent   gitlab-gitlab-agent-589d9cc55-jh9gz   1/1     Running   0          9d
openedx-aws               caddy-5b598c8b-wdjtx                  1/1     Running   0          7h20m
openedx-aws               cms-5b9f5f6748-9gbsn                  1/1     Running   0          7h20m
openedx-aws               cms-worker-749bb5485d-ppgxx           1/1     Running   0          7h20m

- List pods for your instance:

./kubectl get pods --namespace {namespace}

$ ./kubectl get pods --namespace openedx-aws
NAME                             READY   STATUS    RESTARTS   AGE
caddy-5b598c8b-wdjtx             1/1     Running   0          7h21m
cms-5b9f5f6748-9gbsn             1/1     Running   0          7h21m
cms-worker-749bb5485d-ppgxx      1/1     Running   0          7h21m
elasticsearch-5b44c5449c-jln2z   1/1     Running   0          7h21m
forum-7b88b57f6c-mh6fp           1/1     Running   0          7h21m
lms-545499b657-rjtw8             1/1     Running   0          7h21m
lms-worker-85b6cb5c7c-mr2tm      1/1     Running   0          7h21m
mfe-6dd6b9648-zvgql              1/1     Running   0          7h21m
redis-5798cb9bd4-bkhl8           1/1     Running   0          7h21m
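When an instance has many pods, it helps to show only the ones that need attention. This sketch reads single-namespace kubectl get pods output (the table format shown above) on standard input. It prints pods whose status isn't Running or whose READY count is incomplete. Completed Job pods will also be listed, since their status is Completed.

```shell
# Print NAME, READY and STATUS of pods that are not Running
# or have fewer ready containers than total (READY "n/m" with n != m).
unhealthy_pods() {
  awk 'NR > 1 {
    split($2, ready, "/")
    if ($3 != "Running" || ready[1] != ready[2]) print $1, $2, $3
  }'
}
```

Usage: ./kubectl get pods --namespace openedx-aws | unhealthy_pods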

- Get a bash shell in the pod:

kubectl exec -it --namespace {namespace} {pod-name} -- bash

$ ./kubectl exec -it --namespace openedx-aws cms-worker-749bb5485d-ppgxx -- bash
app@cms-worker-749bb5485d-ppgxx:~/edx-platform$

Logs

Pods are the only useful entity to get logs for. They can be retrieved with the kubectl logs command.

$ ./kubectl logs --namespace openedx-aws cms-worker-749bb5485d-ppgxx
-------------- celery@edx.cms.core.default.%cms-worker-749bb5485d-ppgxx v5.2.6 (dawn-chorus)
--- ***** -----
-- ******* ---- Linux-5.4.209-116.367.amzn2.x86_64-x86_64-with-glibc2.29 2022-10-14 06:53:52
- *** --- * ---
- ** ---------- [config]
- ** ---------- .> app: proj:0x7fe4af418640
- ** ---------- .> transport: redis://redis:6379/0

Following

Add the -f flag to enable follow mode and tail the logs, e.g. ./kubectl logs -f --namespace {namespace} {pod-name}
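A few other kubectl logs options are handy when debugging. --tail limits output to the most recent lines, and --previous shows the logs of the last container instance that crashed. A deployment/NAME target lets kubectl pick one of that Deployment's pods for you. The sketch wraps these behind a DRY_RUN switch. The namespace and names are examples, and ./kubectl is assumed unless KUBECTL is set.

```shell
run() {
  # Print the command instead of executing it when DRY_RUN is set.
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Follow the last N lines from one pod of a Deployment.
follow_deployment_logs() {
  local ns="$1" deployment="$2" lines="${3:-100}"
  run "${KUBECTL:-./kubectl}" logs -f --tail="$lines" --namespace "$ns" "deployment/$deployment"
}

# Show the logs of a pod's previous (crashed) container instance.
previous_pod_logs() {
  local ns="$1" pod="$2"
  run "${KUBECTL:-./kubectl}" logs --previous --namespace "$ns" "$pod"
}
```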

Describing pods

If a pod failed to start in the first place, there might not be any logs to view. That's where the kubectl describe command helps. kubectl describe can be used with any Kubernetes object to view its configuration and current state.

Output

$ ./kubectl describe --namespace openedx-aws pod lms-545499b657-rjtw8
Name:             lms-545499b657-rjtw8
Namespace:        openedx-aws
Priority:         0
Service Account:  default
Node:             ip-10-0-3-236.ec2.internal/10.0.3.236
Start Time:       Fri, 14 Oct 2022 08:53:29 +0200
Labels:           app.kubernetes.io/instance=openedx-openedx-aws
                  app.kubernetes.io/managed-by=tutor
                  app.kubernetes.io/name=lms
                  app.kubernetes.io/part-of=openedx
                  date=20221014-065327
                  pod-template-hash=545499b657
Annotations:      app.kubernetes.io/version: 14.0.5
                  kubernetes.io/psp: eks.privileged
Status:           Running
IP:               10.0.3.151
IPs:
  IP:           10.0.3.151
Controlled By:  ReplicaSet/lms-545499b657
Containers:
  lms:
    Container ID:   docker://44ed0de728707d8d92b4927b6b58cbdec02163edc690177f3e7f09d847ceaf80
    Image:          registry.gitlab.com/opencraft/grove-aws/openedx-aws/openedx:latest
    Image ID:       docker-pullable://registry.gitlab.com/opencraft/grove-aws/openedx-aws/openedx@sha256:ab50f76c58477b44ee29bfaf6c6c6319e6a3aff0ed7118d77131352265368151
    Port:           8000/TCP
    Host Port:      0/TCP
    State:          Running
      Started:      Fri, 14 Oct 2022 08:53:31 +0200
    Ready:          True
    Restart Count:  0
    Limits:
      cpu:     2
      memory:  1037Mi
    Requests:
      cpu:     100m
      memory:  768Mi
    Environment:
      SERVICE_VARIANT:         lms
      DJANGO_SETTINGS_MODULE:  lms.envs.tutor.production
    Mounts:
      /openedx/config from config (rw)
      /openedx/edx-platform/cms/envs/tutor/ from settings-cms (rw)
      /openedx/edx-platform/lms/envs/tutor/ from settings-lms (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-q5zrd (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  settings-lms:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      openedx-settings-lms-2ctbhkd7mg
    Optional:  false
  settings-cms:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      openedx-settings-cms-62bdk54mtc
    Optional:  false
  config:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      openedx-config-546d99c6bf
    Optional:  false
  kube-api-access-q5zrd:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   Burstable
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:                      <none>

Restarting a pod

There is no command to restart a single pod. However, you can force a restart in one of two ways.

You can delete the pod in question, and the Deployment (through its ReplicaSet) will recreate it. The command to delete it is kubectl delete pod -n {namespace} {pod-name}.

Otherwise, you can kill the main process in your pod with kubectl exec -n {namespace} -it {pod} -- kill 1. This forces a restart of the container.
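If you want every pod of a Deployment restarted, kubectl rollout restart (available since kubectl 1.15) replaces them gradually according to the Deployment's rollout strategy. This saves you from deleting pods one by one. The sketch below shows both approaches behind a DRY_RUN switch. The namespace and names are examples, and ./kubectl is assumed unless KUBECTL is set.

```shell
run() {
  # Print the command instead of executing it when DRY_RUN is set.
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Restart all pods of a Deployment with a rolling update, then wait for it to finish.
restart_deployment() {
  local kubectl="${KUBECTL:-./kubectl}" ns="$1" deployment="$2"
  run "$kubectl" rollout restart --namespace "$ns" "deployment/$deployment" &&
    run "$kubectl" rollout status --namespace "$ns" "deployment/$deployment"
}

# Delete a single pod; its ReplicaSet creates a replacement.
restart_pod() {
  local kubectl="${KUBECTL:-./kubectl}" ns="$1" pod="$2"
  run "$kubectl" delete pod --namespace "$ns" "$pod"
}
```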

Retrieving events

Any time something happens to your containers within the Kubernetes cluster, an event is created. For example, if a pod is OOMKilled, it shows up in this list. To view a list of events ordered by creation time, run:

kubectl get events --all-namespaces --sort-by='.metadata.creationTimestamp'
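To cut the list down, you can limit events to one namespace and filter them with a field selector. With type=Warning, only warning events remain. When a container was OOMKilled, the reason is also recorded in the pod's status, and a JSONPath query can read it. Both are sketched below behind a DRY_RUN switch. The namespace and pod names are examples, and ./kubectl is assumed unless KUBECTL is set.

```shell
run() {
  # Print the command instead of executing it when DRY_RUN is set.
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Warning events in one namespace, ordered by creation time.
warning_events() {
  local ns="$1"
  run "${KUBECTL:-./kubectl}" get events --namespace "$ns" \
    --field-selector type=Warning --sort-by=.metadata.creationTimestamp
}

# Why the pod's containers last terminated (e.g. OOMKilled), if they did.
last_termination_reason() {
  local ns="$1" pod="$2"
  run "${KUBECTL:-./kubectl}" get pod --namespace "$ns" "$pod" \
    -o "jsonpath={.status.containerStatuses[*].lastState.terminated.reason}"
}
```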